Why Byte Arrays Are A Bad Idea When Dealing With Large Data

07/12/2022

Hello, and welcome to my blog post for December 7th of the C# Advent Calendar 2022.

Organised by Matthew Groves & Calvin Allen.

I won't tell you about myself here, but if you are interested, check out my About me page.


First off, I want to share a win: the changes I am about to describe saved 5GB of RAM out of a total of 6GB when processing a 350MB file.

Yes, you read that right! That is an 83% reduction in memory usage!

Second, let me put some context in place:

- The software I am talking about is responsible for reading a proprietary file format holding raw audio data & a bunch of headers per "frame".

- There can be hundreds of thousands of frames, depending on the length of the audio stream recorded.

Now let's look at an example of a 150-minute (2.5-hour) recording:

- The WAV file on disk that this audio originally came from was 280MB.

- The software needed 5GB (yes, that is not a typo! It says 5 GIGABYTES!!) of memory to process the proprietary file.

The difference in file size between the WAV and the proprietary file is not significant, say 10%, so why are we using 18 times as much memory as the file size to process it?

Investigation

We deal with Mono and Stereo audio differently (we support additional channels too, but let's keep it simple), and "up-mix" the Mono audio to Stereo, which doubles its size, so that explains some of the difference.

So we can treat the original file as a roughly 600MB file to account for the up-mix.

Right, so now we are only using 8 times more memory than the file on the disk!

OK, OK, I hear you all saying: "But what has any of this got to do with the topic of the article?"

Well, for those who have not guessed, the data was being read into memory and manipulated there, using... Byte Arrays!

"But why does that use so much RAM? The file is 600MB, so the Byte Array should be the same size." Correct, it is!

Don't run away, I will explain...

We use MemoryStream to walk around the Byte Array, so that data within the array is quick to access.

After much analysis of the code, we found that multiple MemoryStreams were being created to walk around each channel (Mono has 1 channel, Stereo has 2) of audio data.

By default, a MemoryStream creates a Byte Array as its backing storage. This is OK, as it will grow to fit the data written to it (there is a caveat about this later).

```csharp
using System.Collections.Generic;
using System.IO;

int sizeOfLeftChannel = 5000;
List<MemoryStream> channelContainer = new List<MemoryStream>();
MemoryStream leftChannel = new MemoryStream(sizeOfLeftChannel);
channelContainer.Add(leftChannel);
```

So what do you think happens when you go:

```csharp
MemoryStream leftChannel = new MemoryStream(sizeOfLeftChannel);
```

Correct! It allocates a backing Byte Array of sizeOfLeftChannel bytes straight away, before any data has been written to the stream.
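To see what actually gets allocated, here is a minimal sketch (a small .NET console program using top-level statements; the byte counts are arbitrary) comparing a pre-sized MemoryStream with a default one that grows as data is written. `Capacity` is the length of the backing array, `Length` is how much data the stream holds.

```csharp
using System;
using System.IO;

// Pre-sized: the whole 5,000-byte backing array is allocated immediately, before anything is written.
var preSized = new MemoryStream(5000);
Console.WriteLine($"Pre-sized: Length = {preSized.Length}, Capacity = {preSized.Capacity}");

// Default constructor: the backing array starts empty and is replaced by a larger one as data arrives.
var growing = new MemoryStream();
for (int i = 0; i < 300; i++)
{
    // Each time Capacity is exceeded, a new, larger array is allocated and the old contents are copied over.
    growing.WriteByte((byte)i);
}
Console.WriteLine($"Growing: Length = {growing.Length}, Capacity = {growing.Capacity}");
```

The exact growth policy is an implementation detail (current .NET versions roughly double the capacity), but either way a growing stream briefly holds both the old and new arrays while it copies, and usually ends up with spare capacity it never uses.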

Now if I do:

```csharp
var leftBytes = channelContainer[0].ToArray();
```

What I now have is 2 Byte Arrays: ToArray() copies the stream's contents into a brand new Byte Array. Once the stream holds its 5,000 bytes (sizeOfLeftChannel), both arrays are 5,000 bytes in size, doubling my memory allocation.
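When the bytes only need to be read back out, the copy is not always necessary. A minimal sketch, assuming the stream was created with a capacity as above: `TryGetBuffer` exposes the stream's existing backing array as an `ArraySegment<byte>` instead of allocating a new one. Note that it returns false for a MemoryStream constructed over an existing array unless `publiclyVisible: true` was passed to the constructor.

```csharp
using System;
using System.IO;

const int sizeOfLeftChannel = 5000;
var leftChannel = new MemoryStream(sizeOfLeftChannel);
leftChannel.SetLength(sizeOfLeftChannel); // stand-in for writing 5,000 bytes of audio data

// ToArray() allocates a second 5,000-byte array and copies the data into it.
byte[] leftBytes = leftChannel.ToArray();

// TryGetBuffer() returns a view over the array the stream already owns: no new allocation.
bool exposed = leftChannel.TryGetBuffer(out ArraySegment<byte> leftSegment);

Console.WriteLine($"Copy: {leftBytes.Length} bytes, exposed: {exposed}, view: {leftSegment.Count} bytes");
```

The segment is a live view, so any later writes to the stream are visible through it; that is the trade-off for not copying.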

We were doing this in a lot of places: to focus on channels of data, and to walk through them quickly.

So now we know where some of our memory is going, and we can reduce this with some code rework. However, it does not explain all of the memory allocation.

Let's continue the investigation...

It turns out we were using some third-party components (I am not allowed to tell you which) to do specific audio encoding, and they would pull the file into RAM (exactly as we did), convert it to a MemoryStream, and then copy it out to a Byte Array to feed to the encoders.

These components were vital to the process and could not be changed, so let's check the documentation (as we always should) for any clues.

It turns out those encoders offered alternative ways to supply the data (no clues yet as to what they are, all will be revealed soon!), which reduces or removes that memory allocation.

Solution time

I have talked about what the problem is:

- Reading large amounts of data and manipulating it in memory.

- Which, in hindsight, makes it very obvious why we were consuming huge amounts of RAM!

But how can we resolve it?

We are reading data out and putting it into a MemoryStream to consume it further.

Hang on a minute, there are other built-in Streams in dotnet; can we use one of those?

Why yes, of course: FileStream!

We are reading from a file only to process it in a stream in memory, so why don't we just read the file directly instead? All our memory problems will be solved.

Yes, that is true, reworking the code to deal with FileStream instead of MemoryStream reduced our memory consumption dramatically!

We were no longer pulling everything into memory and then processing it; we could read in what we needed, process it, and write it out to another stream with limited memory usage.
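That read, process, write pattern can be sketched with a single reusable buffer. This is essentially what `Stream.CopyTo` does internally; it is written out here only so there is an obvious place to process each chunk. The `CopyInChunks` helper, file sizes and temporary files are made up for illustration.

```csharp
using System;
using System.IO;
using System.Linq;

// Copies input to output through one fixed-size buffer, so memory use stays at
// bufferSize bytes however large the input file is.
static long CopyInChunks(Stream input, Stream output, int bufferSize = 81920)
{
    var buffer = new byte[bufferSize];
    long total = 0;
    int bytesRead;
    while ((bytesRead = input.Read(buffer, 0, buffer.Length)) > 0)
    {
        // A real pipeline would process buffer[0..bytesRead) here before writing it out.
        output.Write(buffer, 0, bytesRead);
        total += bytesRead;
    }
    return total;
}

string inputPath = Path.GetTempFileName();
string outputPath = Path.GetTempFileName();
var data = new byte[200_000];
new Random(1).NextBytes(data);
File.WriteAllBytes(inputPath, data);

long copied;
using (var input = new FileStream(inputPath, FileMode.Open, FileAccess.Read))
using (var output = new FileStream(outputPath, FileMode.Create, FileAccess.Write))
{
    copied = CopyInChunks(input, output, bufferSize: 4096);
}

byte[] roundTrip = File.ReadAllBytes(outputPath);
bool identical = roundTrip.SequenceEqual(data);
File.Delete(inputPath);
File.Delete(outputPath);
Console.WriteLine($"Copied {copied} bytes, identical: {identical}");
```

The small buffer is what keeps memory flat, and it is also why this approach costs time: every chunk is a round trip to the disk.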

"But that is going to be SLOW!" I hear you shout.

Yes, it was: we went from a 20-second conversion time to 3 minutes. OUCH!

Solution number 2

Now that the code has been set up to use Stream everywhere, we can at least look at alternative implementations.

What options do we have in dotnet?

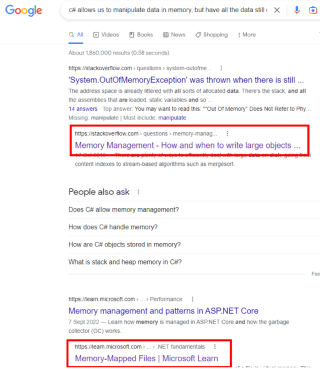

We have already ruled out MemoryStream, and now FileStream. BufferedStream does not make sense in our use case: what we want is something that allows us to manipulate data in memory, but keeps all the data on disk.

Right, time for more research: "To Google!" Let's try searching for: "c# allow us to manipulate data in memory, but have all the data still on disk"

We get these 2 interesting results:

I had not heard of Memory-Mapped Files before, but they sound promising.

The way I interpret the documentation is that I can map part of a file into memory, manipulate it there, and have the changes persisted back to the file. This sounds perfect, the best of both worlds.
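That interpretation is easy to check. A minimal sketch, assuming a hypothetical 16-byte temporary file: it maps only bytes 4 to 7, writes through the view, then reads the file back normally once the mapping is closed.

```csharp
using System;
using System.IO;
using System.IO.MemoryMappedFiles;

string path = Path.Combine(Path.GetTempPath(), Path.GetRandomFileName());
File.WriteAllBytes(path, new byte[16]); // hypothetical file: 16 zero bytes

using (var mappedFile = MemoryMappedFile.CreateFromFile(path, FileMode.Open))
using (var accessor = mappedFile.CreateViewAccessor(4, 4)) // map only file bytes 4..7
{
    // Positions are relative to the view, not the file.
    accessor.Write(0, (byte)42); // file byte 4
    accessor.Write(3, (byte)99); // file byte 7
    accessor.Flush();            // push the modified pages back to the file
}

// The mapping is closed; read the file the ordinary way to confirm the changes persisted.
byte[] onDisk = File.ReadAllBytes(path);
File.Delete(path);
Console.WriteLine(string.Join(",", onDisk));
```

Only the pages covering the view are brought into memory, which is what makes this attractive for large files.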

Because we are dealing with audio, we get another benefit: we can process it in chunks, something like this (not real code):

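```csharp
// Read a reasonable chunk of the WAV file at a time: http://www-mmsp.ece.mcgill.ca/Documents/AudioFormats/WAVE/WAVE.html
const int encodeChunkSize = 1024;
byte[] waveFileFrames = new byte[encodeChunkSize];
byte[] encodedFrames = new byte[encodeChunkSize * 2]; // the required size depends on the encoder
int bytesRead;

// Keep reading until Read returns 0, so the final (possibly partial) chunk is encoded too
while ((bytesRead = inputStream.Read(waveFileFrames, 0, encodeChunkSize)) > 0)
{
    // Only encode the bytes actually read; "encoder" stands in for the third-party component
    int bytesEncoded = encoder.EncodeBuffer(waveFileFrames, 0, bytesRead, encodedFrames);
    outputStream.Write(encodedFrames, 0, bytesEncoded);
}
```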

This allows us to read in the file a bit at a time, encode it, and write it to a new file a bit at a time too. Memory-Mapped Files are definitely for us!
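For a runnable version of that loop, the third-party encoder can be swapped for something simple. The sketch below uses a hypothetical `UpmixMonoToStereo` helper that duplicates each 16-bit mono sample into the left and right channels (assuming 16-bit samples; this is not the real encoder). It reads until `Read` returns 0, so the final partial chunk is processed too, only processes the bytes actually read, and refills the buffer in a loop because a stream may return fewer bytes than requested.

```csharp
using System;
using System.IO;

// Stream.Read may return fewer bytes than requested, so keep reading until the buffer is full or the stream ends.
static int ReadFull(Stream input, byte[] buffer)
{
    int total = 0, n;
    while (total < buffer.Length && (n = input.Read(buffer, total, buffer.Length - total)) > 0)
    {
        total += n;
    }
    return total;
}

// Hypothetical stand-in for the encoder: 16-bit mono in, 16-bit stereo (same sample on both channels) out.
static void UpmixMonoToStereo(Stream input, Stream output, int chunkSize = 1024)
{
    if (chunkSize <= 0 || chunkSize % 2 != 0)
        throw new ArgumentException("chunkSize must be a positive, even number of bytes", nameof(chunkSize));

    var monoChunk = new byte[chunkSize];       // the function's only buffers,
    var stereoChunk = new byte[chunkSize * 2]; // whatever the input length
    int bytesRead;
    while ((bytesRead = ReadFull(input, monoChunk)) > 0)
    {
        int samples = bytesRead / 2; // a trailing odd byte at end of stream is an incomplete sample and is dropped
        for (int i = 0; i < samples; i++)
        {
            // Copy both bytes of mono sample i into the left and right slots of stereo frame i.
            stereoChunk[i * 4]     = monoChunk[i * 2];
            stereoChunk[i * 4 + 1] = monoChunk[i * 2 + 1];
            stereoChunk[i * 4 + 2] = monoChunk[i * 2];
            stereoChunk[i * 4 + 3] = monoChunk[i * 2 + 1];
        }
        output.Write(stereoChunk, 0, samples * 4);
    }
}

var mono = new MemoryStream(new byte[] { 1, 2, 3, 4 });
var stereo = new MemoryStream();
UpmixMonoToStereo(mono, stereo, chunkSize: 2); // tiny chunk size so the loop runs more than once
byte[] result = stereo.ToArray(); // { 1, 2, 1, 2, 3, 4, 3, 4 }
```

With real files, `input` and `output` would be a FileStream or a memory-mapped view stream; memory use is set by `chunkSize`, not by the file size.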

Due to the confidential nature of our code, I cannot share how the code actually works, but here are some snippets (not compilable code) which will help you figure out what you could do:

```csharp
// Create a new temporary file with read/write access, no sharing and a non-inheritable handle; it is deleted when closed
new FileStream(Path.Combine(Path.GetTempPath(), Path.GetRandomFileName()), FileMode.CreateNew, FileAccess.ReadWrite, FileShare.None, 4096, FileOptions.DeleteOnClose);

// Open a memory mapped file from an existing file stream (capacity 0 means "use the file's current size", so the file must not be empty)
MemoryMappedFile.CreateFromFile(fileStream, null, 0, MemoryMappedFileAccess.ReadWrite, HandleInheritability.None, true);

// Create a Stream for a portion of the memory mapped file (arguments: offset, then length of the view in bytes)
Stream leftHandChannelPartialViewOfFileStream = leftChannelMemoryMappedFile.CreateViewStream(startIndexForViewStreamChannel, offsetLength, MemoryMappedFileAccess.Read);
```
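Putting those three snippets together gives a minimal runnable sketch (a small .NET console program). The 8 bytes of data, and the assumption that the two channels are stored one after the other, are purely illustrative; the real file layout is not shown in this post.

```csharp
using System;
using System.IO;
using System.IO.MemoryMappedFiles;

// Temporary file: read/write, not shared, deleted automatically when the stream is closed.
using var fileStream = new FileStream(
    Path.Combine(Path.GetTempPath(), Path.GetRandomFileName()),
    FileMode.CreateNew, FileAccess.ReadWrite, FileShare.None, 4096, FileOptions.DeleteOnClose);

// Hypothetical data: a 4-byte left channel followed by a 4-byte right channel.
byte[] frames = { 10, 11, 12, 13, 20, 21, 22, 23 };
fileStream.Write(frames, 0, frames.Length);
fileStream.Flush(); // make sure the file really is 8 bytes long before mapping it

// Capacity 0 means "use the current file size"; leaveOpen: true because fileStream still owns the file.
using var mappedFile = MemoryMappedFile.CreateFromFile(
    fileStream, null, 0, MemoryMappedFileAccess.ReadWrite, HandleInheritability.None, leaveOpen: true);

// Read-only Stream over just the right channel: offset 4, length 4.
using var rightChannelView = mappedFile.CreateViewStream(4, 4, MemoryMappedFileAccess.Read);
var rightChannel = new byte[4];
int bytesRead = rightChannelView.Read(rightChannel, 0, rightChannel.Length);
Console.WriteLine($"Read {bytesRead} bytes: {string.Join(",", rightChannel)}");
```

The `using var` declarations dispose in reverse order (view, then map, then file), which is the order the mapping needs.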

Conclusion

So let's finally answer the question: "Why Are Byte Arrays A Bad Idea When Dealing With Large Data?"

Simply put - They use a lot of memory!

That is not necessarily a bad thing "IF" you can ensure your data is always small enough and you don't copy it about endlessly.

However, there are other ways of dealing with large amounts of data, and that is to use some kind of Stream over the data on disk, be it a FileStream or a view stream over a MemoryMappedFile.

FileStreams are great, but can be very slow, so a Memory-Mapped File is a great alternative: the operating system maps the file into the process's address space and reads pages from disk only as they are accessed. But as with all things, there are trade-offs and gotchas (I will leave it to you, Dear Reader, to find out more, or come and chat on Twitter).

Finally, I mentioned earlier a caveat with MemoryStream: it is backed by a Byte Array and uses an int for its length, so it is limited to 2GB in size (and in a 32-bit process, a single contiguous allocation that large will often fail well before that limit). Our process is currently limited to 32-bit due to third-party dependencies.
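A quick way to see that limit: asking a MemoryStream for a length one byte beyond `int.MaxValue` throws, even in a 64-bit process, and it does so before allocating anything. A minimal sketch:

```csharp
using System;
using System.IO;

var stream = new MemoryStream();
bool rejected = false;
try
{
    // One byte more than int.MaxValue: too long for any MemoryStream.
    stream.SetLength((long)int.MaxValue + 1);
}
catch (ArgumentOutOfRangeException)
{
    rejected = true;
}
Console.WriteLine($"Rejected: {rejected}, length is still {stream.Length}");
```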

.NET Framework only: By default, the maximum size of an Array is 2 gigabytes (GB). In a 64-bit environment, you can avoid the size restriction by setting the enabled attribute of the gcAllowVeryLargeObjects configuration element to true in the run-time environment.

If you got this far through my first C# Advent Calendar article, then thanks a lot, I appreciate your time.

Come say Hi on Twitter

Happy holidays, everyone!
