All Blog Posts

Newest posts are first.

Contents

- This Service Descriptor Is Keyed Your Service Provider May Not Support Keyed Services

- Make A Wireshark Capture File More Focussed

- Forcing Tests To Run In A Specific Order Using MSTest

- Upgrading From IdentityServer Version 2 To IdentityServer Version 4

- Docker Compose Scaling Gotcha Part 2

- Using ObjectDumper Breathed New Life Into A Complex System

- How To Prepare For The First Day Of A New Starter

- My Thoughts On Using GitHub CoPilot

- 50 Ways To Retain An Employee

- Recording Video From A Nintendo Switch

- Caching The Contents Of This Site

- Adding More Disk Space To Azure DevOps Agents

- Four Years Have Gone

- Querying Console Output In Your Tests

- Filtering A Pcap VoIP Recording To A Specific Call In Wireshark

- Playing A Captured VoIP Recording Through Wireshark

- Replaying Network Captures

- Explicitly Including A DLL In A Project

- Hosting A Large Number Of Participants In Microsoft Teams

- Dynamically Changing Docker Container Limits

- Docker Compose Parallel Gotcha

- Intermittent Playwright Exception

- Its Time To Talk About Lego

- Docker Compose Scaling Gotcha

- Three Years Have Gone

- Testing In Dotnet

- Why Byte Arrays Are A Bad Idea When Dealing With Large Data

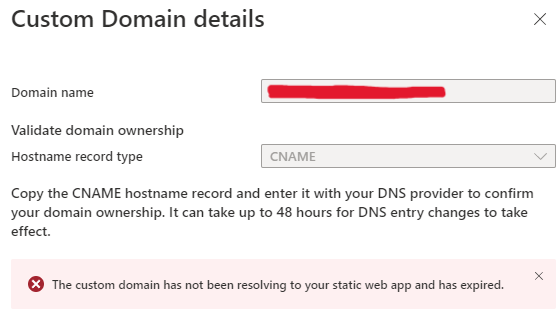

- Azure Static Web App Invalid SSL Certificate

- Reading UDP Packets With SharpPcap

- How To Confirm 2 Audio Files Are Identical

- Microsoft Teams Compliance Recording _ Part 2

- Microsoft Teams Compliance Recording

- February 2022 Lego Builds

- Meeting Timer For Microsoft Teams Zoom Google Meet

- Working With Ffmpeg

- Two Years Have Gone

- Summing Up 2021

- Sorting Lego

- Remote Meetings

- Remote Pair Programming

- Atlassian Products

- First Day At New Job Fail

- Different Staging Environments In Azure Static Web Apps

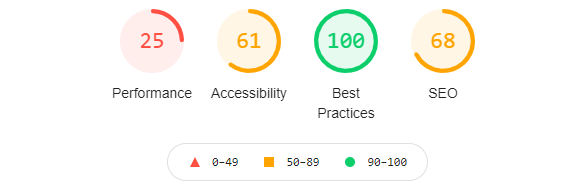

- Speeding Up The Initial Load

- Setting Up A Sitemap

- Installing A Garden Office _ Part 7

- Installing A Garden Office _ Part 6

- Installing A Garden Office _ Part 5

- Lego Saturday

- Installing A Garden Office _ Part 4

- Installing A Garden Office _ Part 3

- Getting Into Blogging

- Installing A Garden Office _ Part 2

- Adding Category Pages

- Job Search 2021 _ The End

- Job Search 2021 _ Working With Recruiters

- Job Search 2021 _ A Live Programming Test

- Installing A Garden Office _ Part 1

- Tagging Posts

- Highlighting Search Terms

- Job Search 2021 _ Another Programming Test _ Feedback

- Adding A Site Search

- Job Search 2021 _ Another Programming Test

- Job Search 2021 _ Choosing The Right Role

- My Mentoring Journey

- Making This Site More Accessible

- Customising The Blazor Loading Page

- Testing This Site

- Job Search 2021 _ Technical Tests

- Secrets Within Blazor WASM

- Why Does It Feel Weird That People Are Reading My Blog

- Getting Twitter To Work

- Being A 5 Year Old

- Its Been A Year

- How I Built This Blog Part 3

- Error When Incorrect Post Uri Used

- Job Search 2021 _ Using Your Friends

- Job Search 2021 _ Where To Look

- Job Search 2021 _ Initial Steps

- How I Built This Blog Part 2

- Being The Executor Of Someones Will

- Why Can I Not Jump Direct To A Blog Post

- Setting Up My Custom Domain

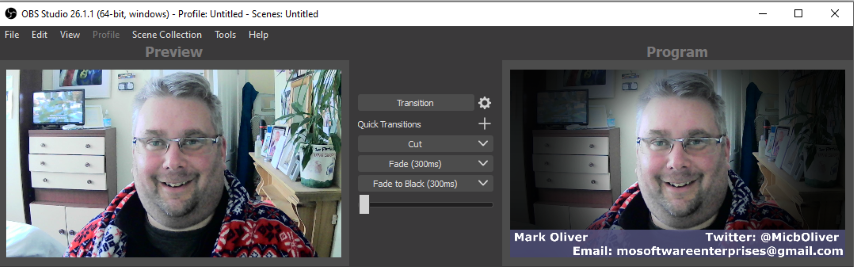

- My OBS Configuration

- How I Built This Blog

This Service Descriptor Is Keyed Your Service Provider May Not Support Keyed Services

I just came across this error without any code changes.

System.InvalidOperationException HResult=0x80131509 Message=This service descriptor is keyed. Your service provider may not support keyed services. Source=Microsoft.Extensions.DependencyInjection.Abstractions StackTrace: at Microsoft.Extensions.DependencyInjection.ServiceDescriptor.ThrowKeyedDescriptor() at Microsoft.Extensions.DependencyInjection.ServiceDescriptor.get_ImplementationInstance() at Microsoft.Extensions.DependencyInjection.ServiceLookup.CallSiteFactory.Populate() at Microsoft.Extensions.DependencyInjection.ServiceLookup.CallSiteFactory..ctor(ICollection`1 descriptors) at Microsoft.Extensions.DependencyInjection.ServiceProvider..ctor(ICollection`1 serviceDescriptors, ServiceProviderOptions options) at Microsoft.Extensions.DependencyInjection.ServiceCollectionContainerBuilderExtensions.BuildServiceProvider(IServiceCollection services, ServiceProviderOptions options) at Microsoft.Extensions.DependencyInjection.ServiceCollectionContainerBuilderExtensions.BuildServiceProvider(IServiceCollection services) at MediaServer.Startup.SetupRateLimitWithRetryStrategyPipeline(IServiceCollection services) in Startup.cs:line 129 at MediaServer.Startup.ConfigureServices(IServiceCollection services) in Startup.cs:line 86 at System.RuntimeMethodHandle.InvokeMethod(Object target, Span`1& arguments, Signature sig, Boolean constructor, Boolean wrapExceptions) at System.Reflection.RuntimeMethodInfo.Invoke(Object obj, BindingFlags invokeAttr, Binder binder, Object[] parameters, CultureInfo culture) at Microsoft.AspNetCore.Hosting.ConfigureServicesBuilder.InvokeCore(Object instance, IServiceCollection services) at Microsoft.AspNetCore.Hosting.ConfigureServicesBuilder.<>c__DisplayClass9_0.<Invoke>g__Startup|0(IServiceCollection serviceCollection) at Microsoft.AspNetCore.Hosting.StartupLoader.ConfigureServicesDelegateBuilder`1.<>c__DisplayClass15_0.<BuildStartupServicesFilterPipeline>g__RunPipeline|0(IServiceCollection services) at Microsoft.AspNetCore.Hosting.ConfigureServicesBuilder.Invoke(Object instance, IServiceCollection services) at Microsoft.AspNetCore.Hosting.ConfigureServicesBuilder.<>c__DisplayClass8_0.<Build>b__0(IServiceCollection services) at Microsoft.AspNetCore.Hosting.StartupLoader.ConfigureServicesDelegateBuilder`1.<>c__DisplayClass14_0.<ConfigureServices>g__ConfigureServicesWithContainerConfiguration|0(IServiceCollection services) at Microsoft.AspNetCore.Hosting.ConventionBasedStartup.ConfigureServices(IServiceCollection services)

😨

Turns out a minor nuget package upgrade caused it (in this case

Polly

v8.0.0

to

v8.3.1)

I found this issue on github which is exactly what I am seeing:

https://github.com/dotnet/runtime/issues/95789

It basically says; something has changed in dotnet DI registrations, and now anything that uses a "Keyed" registration is potentially going to break by throwing this exception.

This is because of a change in v8 of

Microsoft.Extensions.DependencyInjection:

- https://weblogs.asp.net/ricardoperes/net-8-dependency-injection-changes-keyed-services

- https://learn.microsoft.com/en-us/aspnet/core/fundamentals/dependency-injection?view=aspnetcore-8.0#keyed-services

- https://andrewlock.net/exploring-the-dotnet-8-preview-keyed-services-dependency-injection-support/

The only way I solved it was to downgrade

Polly

back to version

8.2.1

This was using a

dotnet6

app.

I don't know what else to do to work around this error, as we are not registering anything in a "Keyed" way, nor are we directly referencing v8 of

Microsoft.Extensions.DependencyInjection. So I am concerned that we are going to be "stuck" at certain version of packages, and eventually be unable to upgrade anything. 😱

Someone please Tweet me if I am wrong!

Make A Wireshark Capture File More Focussed

Wireshark capture files can be massive. So you may want to remove all the unnecessary data from the file before further analysis.

This can be done via the UI, but it is easier to do it via the command line.

Run the following tshark command, from your Wireshark installation directory:

tshark.exe -r <filename of original capture file> -R <display filter> -w <filename of smaller capture file>

-

<filename of original capture file>is the full path to the capture file. -

<filename of smaller capture file>is the full path to the new capture file. -

<display filter>is the filter criteria you wish to use to select the capture packets you want to keep. (These are exactly as you would enter them in the Wireshark UI.)

Examples:

-

"sip or sdp or rtp"- Will filter for only the traffic that is used in VoIP calls -

"ip.addr == 192.168.1.10"- Will filter for only the packets that involved the IP address192.168.1.10 -

"tcp.port eq 25 or icmp"- Will filter to only SMTP (port 25) and ICMP traffic:

This will apply the filters to the file, and only the packets that match the filter will be in the output file.

Forcing Tests To Run In A Specific Order Using MSTest

By default, MSTest will run all tests in alphabetical order based on their name.

This means, you should be able to know when a test will run in relation to another.

For example, if you prefix your tests with TXXX, where XXX is the test number:

T001_test_something T002_test_something_else T003_third_test_to_be_executed

Then these tests will (by default) run

T001

first, followed by

T002,

T003

etc...

Watch out though, the ordering is based on string comparison, so a test named

T010will run beforeT002!

But you can run them in your own order without changing the name, and that is to use the

Priority

attribute

Add this to the top of each

TestMethod

you wish to define an order of e.g.

[TestMethod, Priority(1)] public async Task ATestThatWillRunFirst() { // Arrange // Act // Assert } [TestMethod, Priority(2)] public async Task AnotherTestThatWillRunSecond() { // Arrange // Act // Assert }

I don't recommend this approach, as any test should be atomic, and therefore not matter what order they are run in.

In fact this is vitally important if you are running tests in parallel!

BUT there may be an odd occasion you want them to run in a specific order, such as complex Integration Tests that require significant setup, and so this can be useful.

Upgrading From IdentityServer Version 2 To IdentityServer Version 4

I have recently needed to upgrade from IdentityServer v2 to IdentityServer v4.

This was relatively painless (upgrade via nuget), but I had these 3 issues:

1 - PublicOrigin is no longer exposed, need to add it manually:

//Add in PublicOrigin as it has been removed in v4 of IdentityServer: https://github.com/IdentityServer/IdentityServer4/issues/4535 app.Use(async (ctx, next) => { ctx.SetIdentityServerOrigin("YourRequiredPublicOrigin"); await next(); });

2 - Add in ApiScopes :

.AddInMemoryApiScopes(ApiScopes.GetApiScopes())

Everything worked, except my Postman tests. Weird!

All my C# code was fine, which confused me.

After a lot of searching, I came across this page (English version) by Gabriel Bareclos.

It said:

I changed the content-type of the request from: form-data to: x-www-form-urlencoded .

So in my postman, I changed:

"mode": "formdata", "formdata": [

to this:

"mode": "urlencoded", "urlencoded": [

That was it. That fixed my postman tests!

Note - I should have looked at the docs again, although it is something that might pass you by:

POST /connect/token CONTENT-TYPE application/x-www-form-urlencoded client_id=client1& client_secret=secret& grant_type=authorization_code& code=hdh922& redirect_uri=https://myapp.com/callback

Docker Compose Scaling Gotcha Part 2

Check out my previous gotchas!

Today I ran this command to scaled from 5 existing services, to 10 copies of

service1

docker compose -f config.env up -d --scale service1=10 service1

What do you think happens?

Well, again, not what I was expecting!

I was expecting

service1

to have 10 scaled versions (which it did), but what I was NOT expecting is it restarted the previous running 5 versions!

So what is going on?

When you run a

docker compose

command it looks at the config you have given it with the new command, and compares it to the previous config setup you gave it.

IF the config is different, its default is to restart the existing services to bring them inline with the new configuration.

When you are scaling, that is not necessarily what you want, so you need to be more explicit about what you want it to do.

Fortunately there is a

no-recreate

option we can provide to

compose

that tells it you just want to scale the services, and ignore any changes to config for the existing ones.

This makes the command look like this:

docker compose -f config.env up -d --scale service1=10 --no-recreate service1

I wish Docker would tell you that services are going to restart before doing it, as on a shared instance, you have no way to identify if the config has changed since starting a service.

Oh well another reset of the number of days since I took out PRODUCTION!

Using ObjectDumper Breathed New Life Into A Complex System

For a previous article on Testing in Dotnet, I wrote about the package ObjectDumper.Net

I use this for lots of things, but it is great to add to a test to really see what an object looks like.

So it was obvious for me that I needed to write more about that!

But when I started writing this article (last summer), ChatGPT was everywhere (not that this has changed), so i thought, lets see what it can tell me about ObjectDumper.Net as a start to the article.

Overview From ChatGPT

Prompt:

Tell me why we should use ObjectDumper.Net

ChatGPTs response was:

ObjectDumper.Net is a useful tool for developers working with .NET applications, especially during debugging and data exploration processes. Here are some reasons why you might consider using ObjectDumper.Net:

- Easy Object Visualization: ObjectDumper.Net allows you to visualize the contents of an object in a human-readable format. It can recursively traverse object graphs and present the properties and values of each object in a structured manner. This makes it easier to understand complex object hierarchies and quickly identify the data you're interested in.

- Debugging Aid: When debugging an application, it's often necessary to inspect the state of various objects at runtime. ObjectDumper.Net simplifies this process by providing a convenient way to dump object data to the console or a text file. You can quickly examine the properties and values of objects without having to write custom code to traverse and display them.

- Rapid Prototyping: During the early stages of development, when you're experimenting with different code structures and data models, ObjectDumper.Net can be a handy tool. It allows you to visualize objects and their relationships, making it easier to validate your assumptions and verify that your code is behaving as expected.

- Exploration and Documentation: When working with unfamiliar libraries or APIs, ObjectDumper.Net can assist in exploring the available objects and their properties. By dumping objects to the console or a file, you can study their structure and understand the data they hold. This knowledge can then be used to document the behavior of third-party components or to aid in writing code that interacts with those objects correctly.

- Customization and Extension: ObjectDumper.Net provides various customization options to tailor the output to your specific needs. You can control the depth of object traversal, exclude specific properties, or define custom formatting for property values. This flexibility allows you to adapt ObjectDumper.Net to your preferred output style or integrate it seamlessly into your existing debugging workflows.

- Open Source and Community Support: ObjectDumper.Net is an open-source project, which means it benefits from community contributions and support. If you encounter any issues or have suggestions for improvements, you can engage with the community, report bugs, or even contribute your own enhancements to the project.

Overall, ObjectDumper.Net is a valuable tool for .NET developers that simplifies the process of visualizing and exploring object data. Whether you're debugging, prototyping, or documenting your code, ObjectDumper.Net can save you time and effort by providing an easy-to-use mechanism for examining the contents of objects in a human-readable format.

I was very happy with the response. I found it helpful, and it covered everything I would have said about it.

So let me tell you a bit about how ObjectDumper.Net has helped me to view the internals of a complex application.

Usage Example

I have inherited a software system that integrates with a very complex third party system. It is used to record calls that are happening on a Phone Exchange (PBX).

This system is very complex and must maintain a lot of state when receiving data from the third party application. It requires that state to follow a phone call through the changes within the PBX, and allows the recording software to show useful meta data about the call. e.g.

- Who made the call

- Who received the call

- What call queue it went through

- How long was the call

- What other participants were involved in the call

- Were any keypad buttons pressed during the call

- etc...

Think of it like this: The call recording software is primarily used in call centres...

"This call is being recorded for safety and for training purposes"

... so thousands of calls go through a PBX in a day. They come in. They go out. They get transferred between departments, people & groups of people. They get put on hold. They get sent to voicemail etc...

All of these changes need to be retained to allow the recording to be identified for those "training purposes".

It should be clear now, that the journey of a call can be very complex!

Debugging this system that had no documentation on how it worked, nothing on how it was architected/designed and no automated tests was incredibly hard!

Quickly in using and debugging the system, it became clear that the state of the "recording" was the most important part.

This state data was

dynamic, and had to morph for different recording PBX types.

The complexity and fragility of this system and the fact that it was used in 100s of production environments meant that we needed to tread lightly in the code for fear of breaking things.

We needed a way to log out the contents of the state as it changed, which would allow analysis of a calls journey through the system and its state changes with a few small code changes.

What we needed was to output the state without knowing what it was made of!

In enters ObjectDumper.Net!

If we add a small log message that has the state "dumped" out, we could add a huge amount of context to what was going on:

Logger.Debug($"State of the call at {timestamp}: {call.State.Dump())})

This was a game changer for this system, and the overhead of the "Dump" was minimal for the benefits it gave us (although we could compile it out if we wanted to).

I highly recommend

ObjectDumper.Net

as a quick way to view the contents of an object without retrospectively adding

ToString()

method everywhere (though I highly recommend them too!)

I wrote more about why this was useful for tests in this post Querying Console Output In Your Tests.

I will leave you with a final comment - ObjectDumper.Net will write out the whole contents of an object, and it can be full of unhelpful data, so use it wisely.

Look at the output it is giving, and ideally replace it with more structured logs if you can!

How To Prepare For The First Day Of A New Starter

When someone is joining the company for the first time, there is a lot of preparation required to bring them onboard and make them feel a part of the company and the team.

I have joined companies in the past where I have turned up and had to:

- Clear out the previous occupants desk

- Find a computer and a chair

- Request licenses for the basics e.g. Visual Studio

Also while working remotely, I have also turned up to:

- No access for company network/website.

- No access to my manager for several days and no way to contact anyone else.

Therefore when you are bringing a new starter into the team, this is the list I use to help me board them:

Physical things

- PC

- Monitor(s)

- Headset/Microphone/Speakers

- Webcam

- Notebook & Stationary

- Chair

- Desk

Virtual things

- Software Logins & Licenses e.g. Visual Studio, GitHub, Jira, HR System, MS Office, Email

- An Organisation diagram

- Links to all the relevant documentation - Specifically a team specific "New Starter Guide"

Human things

- Someone to greet them on their first day

- Someone to show them around the premises on their first day

- Someone to introduce them to the team, department, key stakeholders, HR and Site Services

- A consistent point of contact for all questions

- A definitive plan for the first day. One person should be given the job (& time) to ensure that occurs.

- Ensure that there Line manager contacts them as soon as possible. Even remotely or in a different time zone this is a must do.

Onboarding does not stop on Day 1

Then after they turn up on the first day, these additional things will be needed, but not necessarily on that day:

- Communicate frequently with them.

- A Training Plan - To start with, this will be company specific training e.g. How to use the software systems. Long term training plans come later.

Bringing a new person on to your team well, is so important for them to start there career with you. If as a new joiner, you don't feel like a part of the team quickly, you will likely never achieve your full potential, and at worst, leave soon, and waste all the effort the company has put in.

My Thoughts On Using GitHub CoPilot

Recently I enabled GitHub Co-Pilot in Visual Studio.

This was following a talk about AI with Silicon South.

I have heard loads about it, but had not had the urge to use it.

To give this post context, I work on a large existing code base (About 1 Million Lines Of Code) with a unique and bespoke platform, so I didnt feel it would help too much, hence the lack of fervour to try it.

Today I changed that, so here are my random thoughts when using it:

Day 1

Moving code around

Working on fixing some broken tests it offered nothing. Which was no surprise as I was moving around code.

Comments

The first time it fired, it wrote a comment for me that was spot on!

I typed:

//We have received a new snapsh

it said:

//We have received a new snapshot, so we need to update our internal list of users

It's comments are very generic, but they are a good place to start.

Existing Code versus New Code

It likes to create new content, but does not like to add to existing things.

e.g. Will create a unit test for you, but wont fill in a blank method

So this encourages a different way of writing than I am used to. I would normally plan by building out skeleton code and then gradually fill it in.

CoPilot wants to build it immediately!!

To make it start making suggestions within existing code, you need to "prompt" it as if it was chatGPT.

The best way of doing that is to start writing comments or pseudo code, and it then gets a much better idea.

Like a Newbie

It often gives incorrect code, which can be very confusing, as at first glance it looks correct.

It is like working with a person who understands how to code and the classes of the system, but not what it is supposed to do, or how it works. There is no

Domain Knowledge.

Day 1 Conclusion

After using it for a day, it saved me a small amount of work.

I look forward to using it on newer things to see its power, but right now, it wont be cost effective for legacy code maintenance.

Day 2

I spent the day adding new tests to existing complex code, that has no current tests.

I found that it is good at guessing, but it gets it wrong a LOT.

This actually slowed my flow down, as I kept having to look at what it was suggesting, and confirm if it was correct, and actively discarding it before writing what I needed.

Again, it felt like someone was really trying to help, but butting in with not thought out suggestions.

Just to be clear - if I was pair/mob programming with a real person, this is the kind of thing I would want to see, as it gives me an opportunity to help them learn.

BUT Copilot is a tool, and it is here to help ME, and not for ME to teach IT. So it comes off as annoying!!

The best thing it did, was save me typing when generating test data constructs though.

Final Conclusion

My biggest problem is it suggests things that are clearly (well to me) wrong.

This was mostly when suggesting parameters that are completely different types.

This is very annoying as it breaks concentration for me, because I am having to solve a problem that would not be introduced normally.

I liken this to a small child asking you questions while you are typing (Why is the sky blue? What is your favourite Carrot? What’s faster? Fire or dust?), sometimes you just end up typing what they are saying and have to redo it all again.

I am still open minded about Co-Pilot, ChatGPT and all the other AI tooling being developed, but for my current company project, it is not worth the cost to me, both financially and with my time.

50 Ways To Retain An Employee

My friend Hira suggested I write a post detailing 50 ways to retain an employee. This is all I have right now:

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

- Give them expensive lego sets every month

- Free Krispy Kreme Donuts on Friday

Thanks Hira. This is my favourite post 😃

Recording Video From A Nintendo Switch

My son has decided he would like to have a YouTube channel showing him playing games on his Nintendo Switch.

He sees lots of YouTubers playing games on YouTube, so assumes it is something easily done.

So this is my exploration ( as an awesome parent 😃 ) into how to make that happen.

I have some experience with OBS, FFMPEG and recording Microsoft Teams, so in the words of Jeremy Clarkson "How hard can it be?"

My first stop is Google, which comes up with a recording feature in some Switch games, but that wont work for what we want.

We want to be able to record the whole session of gameplay, and then turn that into a YouTube video, this is for short replays really.

My first thought was a Video Capture device that would link to my PC, which as it turns out is the 2nd option touted in this video: https://www.youtube.com/watch?v=zmdeOuUW5_8

They also suggest using a camera to directly record the consoles screen, which is not good enough for us.

Everything I looked at always suggested a Video Capture device, and based on several YouTube videos, suggest that for the most part even the cheapest ones are ok.

So with that in mind, I decided to go a step above the cheapest and get a brand I had heard of before. I went with the Mirabox USB 3.0 4K Pass-Through Capture Card for £30 https://www.amazon.co.uk/dp/B091YCHYP3?psc=1&ref=ppx_yo2ov_dt_b_product_details

It is just a small box with a USB cable and 2 HDMI Ports. You plug the Nintendo Switch base station into the

Input, you connect a HDMI cable from the

Output

back to the TV, and you plug the USB into a PC to record the video on.

Simples! ?????

It works like a charm, my son can play on his Switch on the TV as normal, and we can record his gameplay on a computer in the corner.

This Box will work for anything with a HDMI cable output that you would normally plug into the TV, so opens a world of possibilities!

Caching The Contents Of This Site

This site is all stored statically (to make it completely free to host in Azure Static Web Apps), but it is generated dynamically using Blazor webassembly!

Find out more how I built this blog here

So because of that, it needs to be fast to run, and more importantly to download (webassembly means it requires the dotnet runtime downloaded, as well as the code for the site).

Once the Blazor code (dotnet runtime + site code) is downloaded, this site is all run in memory, with no further server access (ignoring assets).

So how does that work for the blog posts?

The posts themselves are markdown text files. This makes them very small, and easy to keep cached in memory.

That means, whenever we access a blog post, it's full contents are kept in RAM.

However we don't want to read all the posts if we don't have to. Therefore we access the posts markdown only ONCE no matter how many times it is accessed by the user (assuming they don't navigate away from the blog).

However there are times we need to have All the posts. This is the case when we do a search or view all blog posts.

For example, when we search, we search the title and the contents of the posts. Which means we need them all in memory to do that.

This may not be sustainable in the long term as the number of posts grow, but for a few hundred it should be fine (famous last words?).

Some quick calculations show that with the current 73 posts, they fit in 270KB (I don't keep the rendered view just the raw text), which is smaller than a lot of images floating round the web.

Adding More Disk Space To Azure DevOps Agents

Out of the box, Azure DevOps Agents have very limited disk space in which to manage your source code.

All of these machines have at least 10 GB of free disk space available for your pipelines to run. This free space is consumed when your pipeline checks out source code, downloads packages, pulls docker images, or generates intermediate files.

For a very large Visual Studio solution (over 450 projects), this meant, we have run out of disk space!

Based on our analysis, we are building over 7GB of data during solution build alone, so with only 10GB to play with, running tests, building installers etc means we do not have enough space.

Unfortunately Azure DevOps does not provide a way of running a MS Hosted Agent with a custom disk size, so that left us with the only option to have a self-hosted setup.

This means setting up your own machine (build agent) to do the Solution Build etc on.

Microsoft give us the instructions to do this, and suggest using Virtual Machine (VM) Scale Sets to build multiple streams in parallel.

The process we need to follow is:

- Create a VM

- Install the build software on that VM

- Generate an Image of that VM

- Setup a VM Scale Set using that Image

- Tell DevOps to use the VM Scale Set

So this is how we did that:

Create VM

On your machine:

Find the right subscription to create the VM scale set etc on:

az account list -o table

az account set -s <your subscription ID>

Then start the VM image creation

az group create --location <azure reqion required e.g. westus> --name <group name>

List of available regions here: https://azure.microsoft.com/en-gb/explore/global-infrastructure/products-by-region/?products=virtual-machine-scale-sets

az vm create --name <VM Name> --resource-group <group name> --image <Image To Use> --admin-username <user name> --public-ip-sku Standard

Note "Image To Use" should be one of these: https://learn.microsoft.com/en-us/azure/virtual-machines/windows/cli-ps-findimage#default-images

Install the build software on that VM

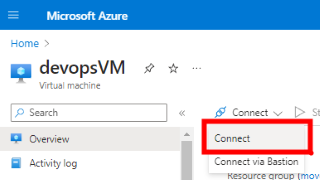

Now Connect (RDP) into the VM created.

(via the Azure Portal "Connect" option next to the VM)

Install the software you need to build your system, such as Visual Studio Build tools, Azure Powershell etc.

Note - The Azure Build agent software will automatically install later, but you still need the build tools you rely on. Some of this can be done via your Azure DevOps pipeline, but for things like Visual Studio then these need to be pre-installed.

e.g. All MS Hosted build agents have this software pre-installed, so you may need to install some of it yourself if you are not explicitly installing it in your pipelines: https://github.com/actions/runner-images

Reboot the VM to ensure all installations are completed.

Generate an Image of that VM

Connect back into the VM, and from an administrator Console Window / Command Prompt, run:

C:\Windows\System32\sysprep\sysprep.exe /generalize /oobe /shutdown

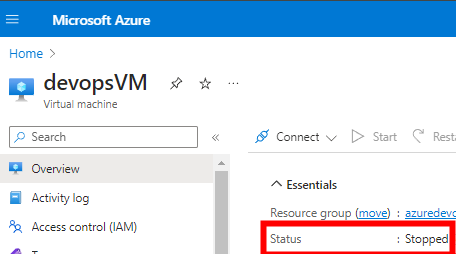

Wait for the VM to finish generalization and shutdown (in Azure you need it to say “STOPPED”). Do not proceed until the VM has stopped.

Then on your machine, run the following:

Deallocate the VM in Azure (stops you being charged for it)

az vm deallocate --resource-group <group name> --name <VM Name>

Tell Azure the VM has been generalised ready for imaging:

az vm generalize --resource-group <group name> --name <VM Name>

Create the VM Image from the generalised VM:

az image create --resource-group <group name> --name <VM Name Image> --source <VM Name>

Setup a VM Scale Set using that Image

And finally create the scale set using the image created:

az vmss create --name <group name>pool --resource-group <group name> --image <VM Name Image> --vm-sku Standard_D2_v4 --storage-sku StandardSSD_LRS --instance-count 2 --disable-overprovision --upgrade-policy-mode manual --single-placement-group false --platform-fault-domain-count 1 --load-balancer '""' --orchestration-mode Uniform --admin-username <user name> --public-ip-per-vm

Note: this VM has a public IP so you can RDP into it. You may also want to tweak some of the hardware characteristics dependant on your needs

Tell DevOps to use the VM Scale Set

Setup the Agent Pool as per these instructions: https://learn.microsoft.com/en-us/azure/devops/pipelines/agents/scale-set-agents?view=azure-devops#create-the-scale-set-agent-pool

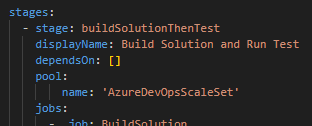

Then adjust your pipeline to use it. For a yaml file, you would add it in the

pool

section of your stage:

You are done

I found that to get Azure Devops to recognise and use the new scale set, you need to WAIT. It takes AGES to recognise and use the new set (in excess of an hour for me).

You will also need to start a pipeline run that uses the scale set to make it do things too.

Making changes to the VM Image

Run the steps in "Cleanup" to remove the existing scale set and the generalised VM.

Then create a new VM using your custom image:

az vm create --name <VM Name> --resource-group <group name> --image <VM Name Image> --admin-username <user name> --public-ip-sku Standard

Install the additional software, and make any other VM changes you need.

Then follow the steps for "Generate an Image of that VM" and "Setup a VM Scale Set using that Image"

Cleanup

To Cleanup if you need to run it again, run these:

Delete the scale set

az vmss delete --name <group name>pool --resource-group <group name>

Delete the VM

az vm delete --name <VM Name> --resource-group <group name>

Four Years Have Gone

D,

The last year I have tried to move on, to see more people, to do more things, to reconnect with myself, to reconnect with our family, to live my life.

It has meant I needed to let go of some of that crippling grief.

I still miss you every day, but I feel you all the time... in the small things: Seeing an odd pair of socks, a crazy pair of shoes, a stupid crude joke, the music you listened to and the movies we loved and most of all in the joy in our kids faces.

I never thought I would have to go through life without you, I expected you to be there for it all.

4 years have gone by, and I have felt every day of that time, the grief has often swallowed me whole, but I have pushed through it, and I am here.

Love you always, M.

Querying Console Output In Your Tests

Hello, and welcome to my blog post for December 16th of the C# Advent Calendar 2023.

Organised by Matthew Groves.

I wont tell you about me, but if you are interested, check out my About me page.

Last year in one of the C# advent calendars, I wrote about Testing in Dotnet.

I find that Unit Testing is a topic that is not discussed as often as needed, and considering that article has had over 2.1k views, I decided that it made sense to write about testing once again.

This time I am going to talk about how I tested a black box system that despite having the code available, could not be refactored to be easier to test with unit tests.

The system in question was a telecomms messaging parser, and was a large and complicated beast. It had no documentation, and none of the implementing software engineers were still with the business.

So inevitably, it had no unit tests either 😱 ! However, it was not completely bereft of tests, it did have some higher level integration style tests.

These tests took a network capture (See Wireshark ), and ran it through the parser, and confirmed that the emissions of the parser were what was expected (Think of it like snapshot testing)

This in theory is a very sound testing strategy for this kind of setup, however the emissions of the parser were very generic, and did not indicate if certain conditions had been met. 😢

What this meant is that over time, the system changed internally and certain code paths were not being executed any more, but due to the state of the final emission it was assumed everything was correct.

Due to the nature of the input, and the system, the existence of the final emission was not enough to prove that the system functioned correctly, and many edge cases were not working.

Therefore, without a major rewrite, how do we confirm that the system is following the correct path?

Well one thing it did well was it logged its internals constantly, which made the job of analysing the issues much easier for the service desk team to help the customers.

But as a software engineer I thought, "Can we use those logs as part of the validation of the system in the automated tests?"

Turns out the answer is Yes!. Dotnet is a very flexible framework, and you can pretty much override anything if you know the right magical incantation.

The first thing was to determine how the system is doing its extensive logging.

This turned out to be the

log4net

logging platform with a bespoke format, and some bespoke handlers added.

Now we know that, we "could" have our tests read the log files directly and parse them for the required items!

This was a great idea, but did not give the feedback we wanted in the unit tests. We would get a test failure, and then have to find the logs and determine why.

So instead, can we redirect those logs to the MSTest Console Output?

That way when we get a failure, we have all the information we need to diagnose the problem further (vital when running external devops agents!)

Turns out this is easy to do with log4net, we can use a ConsoleAppender.

So adding this to our `log4net`` config specifically in the unit tests, gives us the internals of the system redirected to the Console:

<appender name="Console" type="log4net.Appender.ConsoleAppender"> <layout type="log4net.Layout.PatternLayout"> <conversionPattern value="%date %-5level: %message%newline" /> </layout> </appender>

That is useful for sure, we now have all the info in the same place in a failure of the test.

However can we make the tests more useful?

Can they analyse the output in the Console and identify the problems themselves?

Again, yes! Dotnet to the rescue!

We can override the Console output destination to something of our choosing. For us that was a StringBuilder:

public static (TextWriter originalConsoleOut, StringBuilder consoleOutput) CaptureConsoleOut() { //Capture all the Console.Write output to a StringBuilder StringBuilder consoleOutput = new StringBuilder(); TextWriter originalConsoleOut = Console.Out; Console.SetOut(new StringWriter(consoleOutput)); consoleOutput.Clear(); return (originalConsoleOut, consoleOutput); }

If we add this code, to our

TestInitialize

method, then all tests can now get access to the console output.

We can do that by defining the TestContext property, that MSTest will then automatically handle and populate.

This is then available in all parts of the test lifecycle. So in the initialise, we are storing the StringBuilder that will contain the Console output and the original TextWriter used as the Console Output (so we can reinstate it later) as a

Tuple

within the

TestContext

Properties

Dictionary.

public TestContext TestContext{ get; set; } [TestInitialize] public void TestInitialize() { TestContext.Properties["consoleOutInfo"] = CaptureConsoleOut(); }

We are nearly there now. But we have captured all the Console output, so it is no longer displayed in the TestRunner, therefore at the end of the test, we need to force the text intercepted back to the real console, which we will do in the

TestCleanup

method:

[TestCleanup] public void TestCleanup() { var consoleOutInfo = ((System.IO.TextWriter originalConsoleOut, System.Text.StringBuilder consoleOutput))TestContext.Properties["consoleOutInfo"]; //Reset the Console output back to the original output stream Console.SetOut(consoleOutInfo.originalConsoleOut); //Push the captured data to the original outputstream Console.WriteLine(consoleOutput.ToString()); }

So now in our tests, we can query the

consoleOutput

StringBuilder's contents at any time in the test, and

Assert

what the black box system is doing e.g.

var consoleOutInfo = ((System.IO.TextWriter _, System.Text.StringBuilder consoleOutput))TestContext.Properties["consoleOutInfo"]; Assert.IsTrue(consoleOutInfo.consoleOutput.ToString().Contains("COMPRESSING MESSAGE"), "Did not compress the message");

This was a game changer for this type of system, as the end emission being as the stored snapshot was hiding that other parts of the system were not being activated due to missing internal emissions that could not be captured from the Black box.

We can now add additional tests that confirm the path through the code was as expected for each scenario by adding a few simple

Asserts based on the logging.

An Afterthought

Some of you may be thinking that this is a very crude approach, and our own implemented

TextWriter

would be a better choice, and you may be correct, but this was very quick to put in place, so

KISS

&

YAGNI

kicked in.

As often in software, "simple is best", and for the effort required to implement this, its benefit was massive.

In an ideal world, we would have a more observable system, but sometimes you just have to work with what you have.

If you got this far through my C# Advent Calendar 2023 article, then thanks a lot, I appreciate your time.

Come say Hi on Twitter

Happy holidays everyone

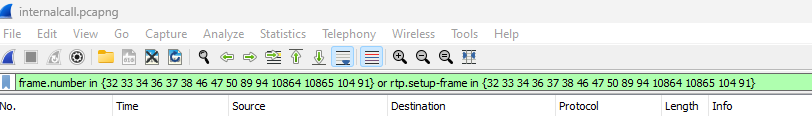

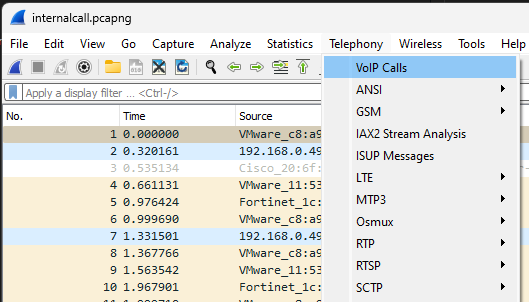

Filtering A Pcap VoIP Recording To A Specific Call In Wireshark

Open your Pcap file in Wireshark.

On the menu, choose

Telephony->VoIP Calls

calls

Then find the call you want in the list, and select it. (Look for an

INVITE 200

in the

Comments

section, and a

From

that has a phone number in it).

Once selected, press

Prepare Filter

button.

This will change the main screen of Wireshark to have a filter like:

Then on the main Wireshark window, select

File->Export Specified Packets, and then on the following dialog, choose

All packets

&

Displayed

Enter a filename and a location, and hit Save.

Then you can open that new file in Wireshark and it will only contain the packets for that single call.

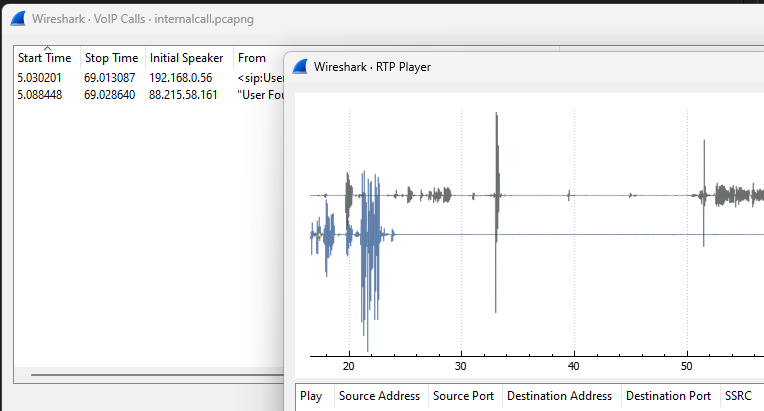

Playing A Captured VoIP Recording Through Wireshark

If you have a Pcap file network capture (generated by Wireshark perhaps) with a SIP or any Voice Over IP (VoIP) call traffic, you can listen to the raw calls within the Wireshark.

Open the pcap file in Wireshark.

On the menu, Choose Telephony->VoIP Calls

From the resulting window, choose a call leg to start listening too.

Press “Play Streams” button, and you will get the RTP player (when it has loaded - depends how much is in the recording how long this takes), press the play button.

You should hear the audio of the calls.

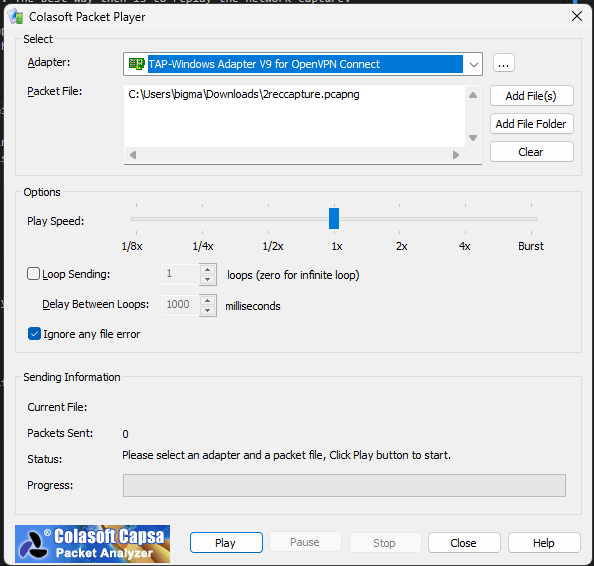

Replaying Network Captures

When building any network related software, sometimes you need to capture the network traffic to debug an issue. The tool of choice tends to be Wireshark, which is truly excellent and I have used it for many years debugging TELNET, HTTP, SIP, UDP & RTP traffic.

However when you have a network capture, and determined the solution to your problem, you now need want to prove the problem is solved, and the best way to do that is to generate the network traffic again. However what if the system outputting the traffic is a closed system miles away. The best way then is to replay the network capture.

Wireshark its self does not support replaying the captures, but there are other tools out there. One of which is a piece of software called Colasoft Packet Player which you can get from here:

https://www.colasoft.com/packet_player/

It is a very simple tool:

Once started, you select which network adapter to send the traffic over andthe network capture file to replay.

Then Click 'Play' and the packets will then be sent over your chosen network card!

NOTE: The packets are sent in real time which means that if you have a 12 hour capture, it will take 12 hours to send all the packets out. There are play speed options to adjust this if your software will cope with that.

Explicitly Including A DLL In A Project

Have you ever seen this kind of error on running your code:

System.IO.FileNotFoundException: Could not load file or assembly 'XXXXXXXXXXXXXXX, Version=X.Y.Z.0, Culture=neutral, PublicKeyToken=yyyyyyyy'. The system cannot find the file specified. File name: 'XXXXXXXXXXXXXXX, Version=X.Y.Z.0, Culture=neutral, PublicKeyToken=yyyyyyyy'

This is easily fixed, by adding the missing package/DLL into your project, and rebuilding.

However what happens when someone does a "remove all unused references" on that project?

Well the DLL reference gets removed, which gives you back the error again, and is only detectable by running your app, so unless you have tests that invoke this behaviour, then you have a sneaky bug.

Note - Most likely you need an Integration test to find this kind of error, as it is a problem with what DLLs are available at runtime for your app, and unit tests may include that DLL in some other way.

To avoid this from happening to you in the future: when you include the pacakage/dll in your project, you need to explicitly reference the DLL in your code.

To make this clear for future developers, I prefer to add a specific class into my code that is responsible for this. It looks something like this:

internal static class MissingDllHack { private static <Type within the assembly> youNeedMe; }

an example that is forcing System.ValueTuple to be included in your project output folder:

/// <summary>The missing dll hack.</summary> [UsedImplicitly] internal static class MissingDllHack { /// <summary> Must reference a type in System.ValueTuple so that this dll will be /// included in the output folder of referencing projects without requiring a direct /// dependency on it. See http://stackoverflow.com/a/22315164/1141360.</summary> #pragma warning disable 169 private static ValueTuple youNeedMe; #pragma warning restore 169 }

Now the DLL is a compile time problem, so if it is not included, you wont be able to compile your code.

Hosting A Large Number Of Participants In Microsoft Teams

Something I learned about today, was the different ways to host people in MS Teams.

1 on 1 calls are great, but sometimes you want to talk to a large group. Normally I reach straight for a "Meeting" in Teams when I want to talk to a bigger number of people.

But if you are talking to a whole department or large team, this can be very distracting as Microphones and Cameras come on and off.

Teams has another way of dealing with this scenario, and it is called a "Town Hall".

This is a great way to present to a large number of people, as well as getting feedback in the form of a Q&A session. Think of it as a Live Stream like on YouTube or Twitch.

Take a look and see if it could be good for you: https://support.microsoft.com/en-us/office/get-started-with-town-hall-in-microsoft-teams-33baf0c6-0283-4c15-9617-3013e8d4804f

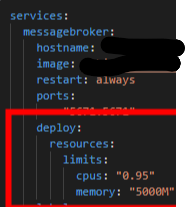

Dynamically Changing Docker Container Limits

We have limits set on our docker containers to limit things like CPU usage, and memory usage.

These are defined in docker compose configuration YAML files, and are set at runtime.

For instance, this setting sets the CPU to only be 95% of the available CPUs, and limit the Memory to 5000MB

This can be changed dynamically without the container being restarted using the docker update command:

https://docs.docker.com/engine/reference/commandline/container_update/

So if we run

docker update -m 700M test-messagebroker-1

This will change the memory limit from 5000M to 700M without the container being restarted.

Note this is a temporary change until the container is restarted, as the compose file will always take precedence on startup.

Docker Compose Parallel Gotcha

Time for another Gotcha with Docker.

This time, we kept seeing calls in Azure DevOps to

docker compose up

that would never complete. It would start some of the services (We have about 40), and then it would just hang.

We have recently upgraded to Docker Compose V2 due to V1 being retired.

We did not see this issue on our local machines, only on DevOps.

The only thing that was different (apart from

docker-compose

changing to

docker compose

in our scripts) is that we noticed during a local build (docker compose build) of our containers, that we would swamp our PCs and make them unusable during the build process, this meant we added this directive to our environments:

COMPOSE_PARALLEL_LIMIT=2

This solved the issue, and we thought nothing of it. Several weeks later, this issue occurs.

Lots of researching of this docker compose hanging, lead to this issue about lacking "entropy" on a build agent:

https://stackoverflow.com/questions/59941911/docker-compose-up-hangs-forever-how-to-debug

https://akliang.medium.com/docker-compose-and-entropy-5b3f00561b88

None of the suggestions there helped.

Then I noticed that if we run a

docker compose up

with a specific container, it never hangs.

This led me to this GitHub issue:

https://github.com/docker/compose/issues/7486

And again the

COMPOSE_PARALLEL_LIMIT

being a factor.

DAMMIT!!

So we see that having it too low, stops

compose

from being able to start (note that this is not consistent, sometimes it works fine 5 or 6 times in a row)

So we set it to 200 in our env file:

COMPOSE_PARALLEL_LIMIT=200

and now it all works fine!

I will add an additional .env file with an override for a local build to help the developers, but for now devops is working.

Intermittent Playwright Exception

I have been getting this error intermittently in our

Azure Devops

based

Playwright

tests using a headless Chrome browser:

Microsoft.Playwright.PlaywrightException: net::ERR_NETWORK_CHANGED Stack Trace: at Microsoft.Playwright.Transport.Connection.InnerSendMessageToServerAsync[T](String guid, String method, Dictionary`2 dictionary) in /_/src/Playwright/Transport/Connection.cs:line 165 at Microsoft.Playwright.Transport.Connection.WrapApiCallAsync[T](Func`1 action, Boolean isInternal) in /_/src/Playwright/Transport/Connection.cs:line 471 at Microsoft.Playwright.Core.Page.ReloadAsync(PageReloadOptions options) in /_/src/Playwright/Core/Page.cs:line 684

We have been unable to figure out the problem, but after another round of googling, I came across this stackoverflow answer:

"The underlying issue one of my docker-compose setups having restart=always. Unfortunatly I had a bug causing a container to terminate and restart. This restart caused the network change. It is determinable by running docker ps and noticing the container restarted."

The problem is:

- The UI that is being tested is running in Docker!

- The systems services are run in Docker via a

composefile! - This is a test environment!

- Some of the containers in our deployment are not fully configured!

- They are constantly restarting!

This is what is causing

Docker

to reset the network, and thus this error we are seeing.

An easy fix was to kill the bouncing Docker containers as they are not needed.

Problem solved (well its not consistent enough to know for sure, but Tweet me in a month and I will be able to confirm or not.)

Its Time To Talk About Lego

I am a big fan of lego, which is a fairly new thing for me, I didn't have any as a kid.

However as an adult with disposable income, I get lots of pleasure from Lego. It is a great stress and anxiety reliever for me.

My "Want to Buy list" at the moment contains:

https://www.lego.com/en-gb/product/motorised-lighthouse-21335

https://www.lego.com/en-gb/product/atari-2600-10306

https://www.lego.com/en-gb/product/the-friends-apartments-10292

My top 3 favourite sets that I actually purchased in 2022 were:

https://www.lego.com/en-gb/product/nintendo-entertainment-system-71374

https://www.lego.com/en-gb/product/ghostbusters-ecto-1-10274

https://www.lego.com/en-gb/product/optimus-prime-10302

These are a couple of the less expensive sets (under £100), that I think are cool:

https://www.lego.com/en-gb/product/horizon-forbidden-west-tallneck-76989

https://www.lego.com/en-us/product/material-handler-42144

You might find some interesting deals here:

https://www.brickwatch.net/en-GB/legodeals/1/best-deals

I can talk about lego for ages!

Last one (I promise). This is beautiful (and on my wall):

https://www.lego.com/en-gb/product/vincent-van-gogh-the-starry-night-21333

Docker Compose Scaling Gotcha

Today I ran this command:

docker-compose up -d --scale service1=10

Then ran this command:

docker-compose up -d --scale anotherservice2=10

What do you think happens?

Well not what I was expecting.

I was expecting

service1

to have 10 scaled versions, and

anotherservice2

to have 10 scaled versions.

What I got was

service1

was scaled back down to the default in the compose file (in this case 5), and

anotherservice2

was bought up to 10.

Why does this happen? Would this have worked?

docker-compose up -d --scale anotherservice2=10 anotherservice2

Turns out YES!

So what is going on?

Lets break the command down...

-

docker-compose up -dsays bring the containers in the compose file up and detach from them. -

--scale anotherservice2=10says we want to ignore the scale options in the compose file foranotherservice2and have 10 of them instead. -

anotherservice2says only apply the previous options to theanotherservice2

I can understand why, now I look at the command, but I would have preferred an indication that it would take

service1

replicas down too.

Oh well another reset of the number of days since I took out PRODUCTION!

Three Years Have Gone

D,

3 years have gone by, and it feels like yesterday. So much has happened, yet so little too.

Families have wained, friendships needed rekindling, and through it all there has been that feeling of emptiness and loss.

3 years without a part of me, 3 years without my whole heart, 3 years without my brother, 3 years trying to find my way back to being "me".

Every day, you have been there, watching me, reminding me. Every day you have been so far away. Every day you have been so utterly missed.

I ask myself, why did this happen, but I realise, there is no rhyme or reason; only "life". You lived your life with joy and abandon, with fun and laughter, with a sense of giving and openness and you loved freely too.

The one thing that will always make me sad is watching our kids have so much fun together and you not being here to share.

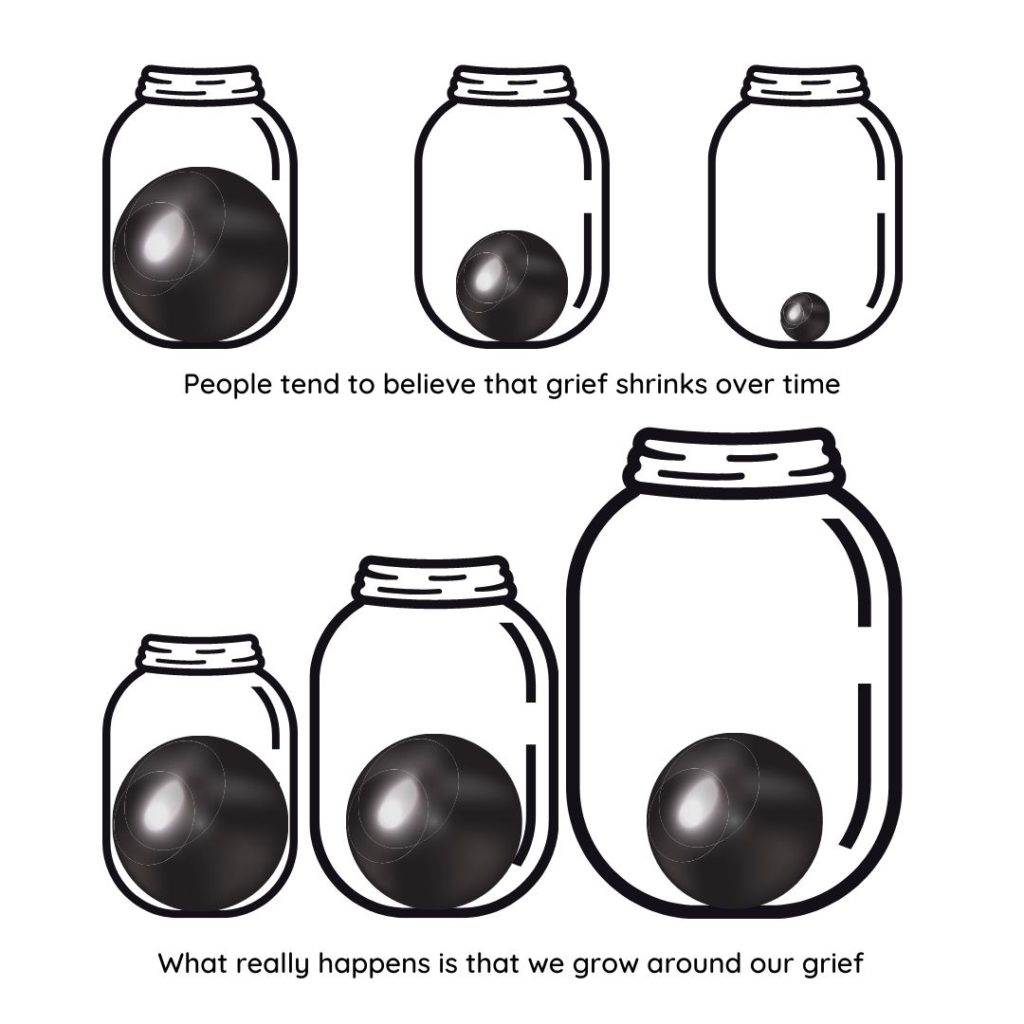

My heart still hurts you are not here, and the grief does not lessen, but I have grown around it, tamed it and smothered its screaming noise with the love and memories of us.

Love, M.

Testing In Dotnet

Hello, and welcome to my blog post for December 15th of the .NET Advent Calendar 2022.

Organised by Dustin Morris Gorski on Twitter.

I wont tell you about me, but if you are interested, check out my About me page.

Testing in Dotnet

My view is that we don't talk enough about Testing in the Software Development world!

I have been working in the industry for a long time, and have seen the benefit of testing, not just in creating stability in the software, but speed of development, confidence of the engineers, confidence of the stake holders, less downtime, less out of hours support required, the list goes on.

So I thought it would be a good idea to talk about how "I" do Testing with Dotnet.

I am talking about automated testing here in the general sense. I am not going to get into a flame war of the difference between unit tests, integration tests, E2E tests, It is nearly the holidays after all 😃

I should say, that this is very much an opinion piece. However I hope you will find something useful within it, and it will give you the passion to start (or continue) on your testing journey in software development.

What tooling

I use Visual Studio 2022 Professional for dotnet development, running on a Windows machine, building with dotnet 6 (at time of writing).

But nothing I am about to say is specific to those things (unless stated). Everything I am about tell you is platform and development environment agnostic, after all Dotnet is very much multi platform these days and every test I write for this blog or the Company I currently work for runs on Linux but is developed in Windows.

What extensions

Within VS, I use the following extensions to help me write tests:

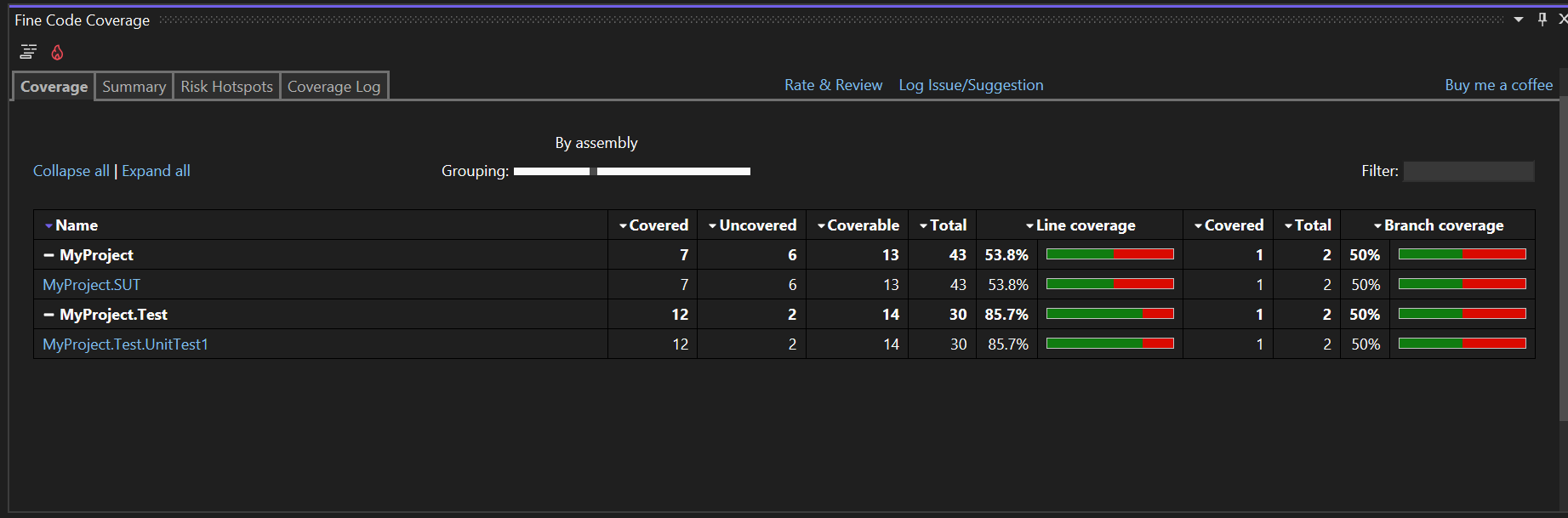

Fine Code Coverage

Created by Fortune Ngwenya

This extension (FCC) is really cool, it can independently show you code coverage, (or with the latest version use the inbuilt coverlet app in VS) to generate a table of code coverage and indicate in the code what paths you have missed.

It can also highlight Risk Hotspots too, identifying complex areas of your code, that could be a focus for a refactoring effort.

I find this tool invaluable, so do check it out.

Please note, code coverage is a metric I find useful but not essential, it helps me discover tests I have not written, and in some cases, identify that a test is not following the path I would expect it to (useful when retrofitting tests to legacy code).

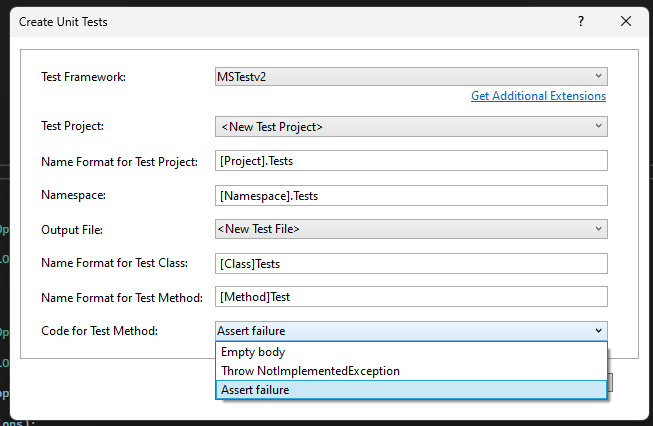

Create Unit Tests Command

(This used to be an extension, but since VS 2019, it is now built in)

This tool allows you to create unit test projects and blank test methods. I find it useful to quickly put the infrastructure in place to allow me to start testing.

However I do end up automatically updating the dotnet version & nuget packages as they are rarely current.

What it gives you then is a

TestClass

with a bunch of

TestMethods. Each test method is intended to exercise each public method of the class it is creating unit tests of.

Unfortunately its usefulness is limited to that, I wish it did more, but "every little helps" to quote a supermarket.

What packages

This is a list of a useful nuget packages:

JustMock Lite

JustMock Lite is an great mocking tool, it gives you just enough functionality to be useful, but not too much that you don't notice the design flaws in your code. See below on "What can it mean if it is hard to write a test?"

System.IO.Abstractions

Combined with Dependency Injection & JustMock Lite, this allows us to abstract away things in

System.IO, such as the

FileSystem. Really handy so you dont have to read and write real data to the disk for your test.

DeepEqual

Allows you to compare the contents of objects with ease.

ObjectDumper.Net

I use this for lots of things, but it is great to add to a test to really see what an object looks like. A good companion with DeepEqual.

MockHttp

This is a new package for me, but BOY do I wish I knew about it sooner. It allows you to fake http responses.

How to start testing

I am going to talk about 2 scenarios: working with code that already exists, and code that does not.

For code that exists already, we will focus on adding tests to the existing structure, so we are focussed on testing methods in classes.

For code that does not exist, then I encourage you to look up the technique of Test Driven Development (TDD).

This means you will write the test before the code exists, and often that can mean you are writing tests at a much higher level. e.g. testing of a feature, rather than a class.

Testing code that already exists

I will create a new unit test project called

projectname.Tests

(using the tool above)

This project will sit next to the project with the code I want to test.

I will focus on testing the public interface of the class, and try to drive the code through the paths of the code I want to test (likely because I want to change it).

If I know enough about the code, I will write the test before any code changes.

This test will prove the existing functionality works as we currently see it.

Then I will write a test that will fail because the code I need to write/change does not exist yet.

Now I have 2 tests, one to prove I have not broken anything unintentionally, and one to prove when I have made the change I need to do.

Note - This is a simplistic example, we may want to write many tests to prove it currently works, and many to prove it will work. Don't limit your testing unnecessarily. This is where FCC comes in handy, as it allows you to identify areas that are not currently exercised by tests, and tell us when we are not executing branches of our code.

As a side note, I often use tests to learn about what the existing code does - exploratory tests. These normally build up as I learn the code and its complexity, until I understand enough to write a test we can keep, or throw it away (but transfer that knowledge into another written form on the internal WIKI).

Testing code that does not exist

We still need to create a new unit project, but this may be named after a feature, rather than a code project, especially if you are going to try TDD.

However you still need a good name and location for the test project.

Then I would create a blank

TestClass

with a

TestMethod

to write the test I need to.

Method name conventions

There are lots of ways to name your test methods, I subscribe to this one from Roy Osherove

This has the method name laid out as so:

UnitOfWork_StateUnderTest_ExpectedBehavior

e.g.

MyAmazingMethod_InputParametersAreAllBadValues_FalseResult()

MyAmazingNewFeature_HappyPathOne_CustomerCompletesTransaction()

Read the article for the details, I don't want to make this article even longer.

The thing to remember though, is to make it clear what the test is trying to do (it is always about readability in software dev!), and be consistent across your code base.

Think about what your CI platform shows you on a test failure, are you getting enough information from it to diagnose the issue. Quite often all you get is the method that failed, so make your future selfs life easier, by giving it a helpful name now.

What is a SUT?

SUT

or

System Under Test

is the term often used to indicate the focus of the test.

I always write it in full, never

sut.

We are always talking about making our code more readable, and then we go and use "sut" in our tests. Pfft

This is the thing we will "Arrange" for, then "Act" on, and ultimately "Assert" about, which leads nicely on to...

AAA

No this is not about Car breakdowns, or having had one too many Sherries (is that a thing? I have always been Tee-Total!).

It is about the test writing pattern "Arrange, Act & Assert"

-

Arrangeinputs and targets.- Arrange steps should set up the test case.

- Think, "Does the test require any dependencies setup?" such as an in-memory database?

- Handle all of these operations at the start of the test.

-

Acton the target behavior.- Act steps should cover the main thing to be tested.

- This could be calling a method, calling a REST API etc.

- There is likely to be only one line of code in this section of the test.

-

Assertexpected outcomes.- Assert steps verify the output of the Act stage.

- Sometimes it is checking an exception threw, or the correct value returned.

- Other times you may need to interrogate the System Under Test (SUT) to determine if it did what you expected

Read more about it here from the awesome people at Telerik JustMock (No sponsorship provided, but I can hope for a xmas present right?).

How many asserts

I have been asked (and asked myself) how many "Asserts" should there be in a single test.

My preferred answer to this is: As many as needed to PROVE that your test has succeeded. This maybe simply checking the return result of the method call, but it could be as complex as checking multiple tables in a DB have been modified, and all files have been removed, and a REST API was hit....

More often the number of asserts you have indicate the level that your test is at:

- One Assert ~= a single method call with a return result

- Multiple Asserts ~= Testing a complex path

As always:

MStest, NUnit, XUnit

There are different packages you can use to write and run tests. I have only ever used MSTest with dotnet.

The folks over at BrowserStack have written a good article on the differences: https://www.browserstack.com/guide/nunit-vs-xunit-vs-mstest

As you can see, they all do similar things, so I would say "Dealers choice", use what you are comfortable with and that your company already uses.

If you have to start with nothing, then NUnit seems to be the most popular, and most examples of tests "on the line" tend to use NUnit.

Public vs private testing

I am a big proponent of public interface testing.

However, that assumes your interface is easy enough to use, and is not hiding a big bloated ball of mud.

If you have a ball of mud, I would suggest writing as many tests as you can to the interface that touches as much of the code as possible.

Then you can start refactoring the ball of mud into smaller balls of mud, using Dependency Injection to maintain the functionality.

By refactoring even small parts of the original code, it WILL make testing of the refactored code and the original code easier.

However if your big ball of mud is so big and mutant like, then perhaps private testing is for you. This will require a different way to run your tests, probably as derived classes.

There are ways through

Reflection

but I do NOT recommend that approach.

Take the refactoring route, it will help you in the long run, and it is likely to be less painful.

InternalsVisibleTo

But wait, all my classes are

internal. I cannot access them from a

.Tests

project.

Firstly - Great!

Secondly - You have 3 options:

- Make your internal classes public (I don't recommend)

- Move your tests into the main code project (I don't recommend)

- Allow your test project to see the internals of the project using InternalsVisibleTo

This allows the

internalmethods in an Assembly to be visible to another Assembly. In this case your.Testsassembly.

You can do this, by adding this to your project file. This will allow a

.Tests

project of the same name as the assembly generated to be able to access the internal classes of your project.

<ItemGroup> <AssemblyAttribute Include="System.Runtime.CompilerServices.InternalsVisibleTo"> <_Parameter1>$(AssemblyName).Tests</_Parameter1> </AssemblyAttribute> </ItemGroup>

Note - You can define this

AssemblyAttributemultiple times if you want to access the internals in multiple projects!

What can it mean if it is hard to write a test?

If you are struggling to write a unit test, normally this would indicate that your code is too complex.

It may need a lot of dependencies with significant setup, or a lot of parameters passed to a method to drive it to the line of code you want to execute.

This generally indicates you have a design issue, and it may be easier to do some refactoring to help with testing.

It is amazing how much testing can be easier when you pull a single encapsulated functionality out to a separate entity.

Not only can the entity be tested in isolation, but you then don't need to worry about testing its fine details in the code that uses it.

Test Constructors

As you have seen in MSTest and NUnit etc, you can do

Setup

and

Tear Down

of testing data/environments in specially tagged methods.

You can also use a constructor of your test class.

I generally try and stay away from any setup/tear down methods. It becomes easy to muddy one test with another's setup.

Also, I like my tests to stand alone, and be runnable in Parallel, and this is much clearer if there is no shared code.

However they are very useful if used carefully.

If you got this far through my first NET Advent Calendar article, then thanks a lot, I appreciate your time.

Come say Hi on Twitter, if you are interested in learning more, then here are some great things to research. I particularly love Mutation testing.

Additional reading

- Unit Testing Best Practices

- Mutation testing with Stryker

- Fluent Assertions

- SpecFlow

- Playwright & Selenium

- Mocking

- DataTestMethod

- DataRow

- Snapshot Testing

- Unitverse

- AutoFixture

- Prevent http requests to external services in unit tests

Happy holidays everyone

Why Byte Arrays Are A Bad Idea When Dealing With Large Data

Hello, and welcome to my blog post for December 7th of the C# Advent Calendar 2022.

Organised by Matthew Groves & Calvin Allen.

I wont tell you about me, but if you are interested, check out my About me page.

Why Byte Arrays Are A Bad Idea When Dealing With Large Data

First off, I want to detail a win: The changes I am about to describe saved 5GB of RAM from a total of 6GB when dealing with a 350MB file.

Yes, you read that right! That is an 83% reduction in memory usage!

Second, let me put some context in place:

- The software I am talking about is responsible for reading a proprietary file format holding raw audio data & a bunch of headers per "frame".

- There can be hundreds of thousands of frames dependant on the length of the audio stream recorded.

Now lets look at an example of a 150min (2.5 hour) recording:

- The WAV formatted file on disk that generated this audio originally was 280MB.

- The software needed 5GB (yes that is not a typo! It says 5 GIGABYTES!!) of memory to process the proprietary file.

The difference in file size between the WAV and the proprietary files are not significant, say 10%, so why are we using 18 times the amount of memory to process a file?

Investigation

We deal with Mono and Stereo audio differently (we support additional channels too, but lets keep it simple), and "up-sample"(?) the Mono audio to Stereo, so that explains some of the difference.

So we can consider the original file size as a 600MB file to accommodate the up-sample.

Right, so now we are only using 8 times more memory than the file on the disk!

Ok, Ok, I hear you all saying But what has any of this got to do with the topic of the article?

Well for those who have not guessed, the data was being read into memory and manipulated there, using...

...Byte Arrays

!

"But why does that use so much RAM: the file is 600MB, the

Byte Array

should be the same size" --- Correct, it is!

Don't run away, I will explain...

We use

MemoryStream

to walk around the

Byte Array, to make it quick to access data within the array.

After much analysis of the code, it was determined, that multiple

MemoryStreams were created to walk around each channel (Mono has 1 channel, Stereo has 2) of audio data.

By default a

MemoryStream

will create a

Byte Array

as its back-end storage, this is ok, as it will grow to fit the data within it (caveat about this later).

int sizeOfLeftChannel = 5000; List<MemoryStream> channelContainer = new List<MemoryStream>(); MemoryStream leftChannel = new MemoryStream(sizeOfLeftChannel); channelContainer.Add(leftChannel);

So what do you think happens when you go:

MemoryStream leftChannel = new MemoryStream(sizeOfLeftChannel);

Correct! It creates a

Byte Array

in the background of the size

sizeOfLeftChannel.

Now if I do:

var leftBytes = channelContainer[0].ToArray();

What I now have is 2

Byte Arrays (the

ToArray()

created a new

Byte Array), both 5,000bytes (sizeOfLeftChannel) in size, thus doubling my memory allocation.

We were doing this in a lot of places: to focus on channels of data, and to walk through them quickly.

So now we know where some of our memory is going, and we can limit this with some code rework, however, it does not explain all the memory allocation.

Lets continue the investigation...

Turns out, we are using some third party components (I am not allowed to tell you which) to do specific audio encoding, and they would pull the file into RAM (exactly as we did), convert to a

MemoryStream, and then pull it out to a

Byte Array

to feed to the encoders.

These components were vital to the process, and cannot be changed, so lets check the documentation (as we always should) for any clues.

Turns out those encoders had alternative choices to encode (no clues yet what they are, all will be revealed soon!), thus reducing/removing the memory allocation.

Solution time

I have talked about what the problem is:

- Reading large amounts of data and manipulating it in memory.

- Which in hindsight is very obvious why we were consuming huge amounts of RAM!

But how can we resolve it?

We are reading data out and putting it into

MemoryStream

to consume it further.

Hang on a minute, there are other inbuilt

Streams in dotnet, can we use one of those?

Why yes, of course: FileStream!

We are reading from a file to process in a stream in memory, why don't we just switch to reading the file directly instead. All our memory problems will be solved.

Yes, that is true, reworking the code to deal with

FileStream

instead of

MemoryStream

reduced our memory consumption dramatically!